Increasing Maximum Plant Iterations reduces Run Time

Something counter-intuitive happened with my model. I would like to know whether others have seen the same behavior and, ideally, what causes it.

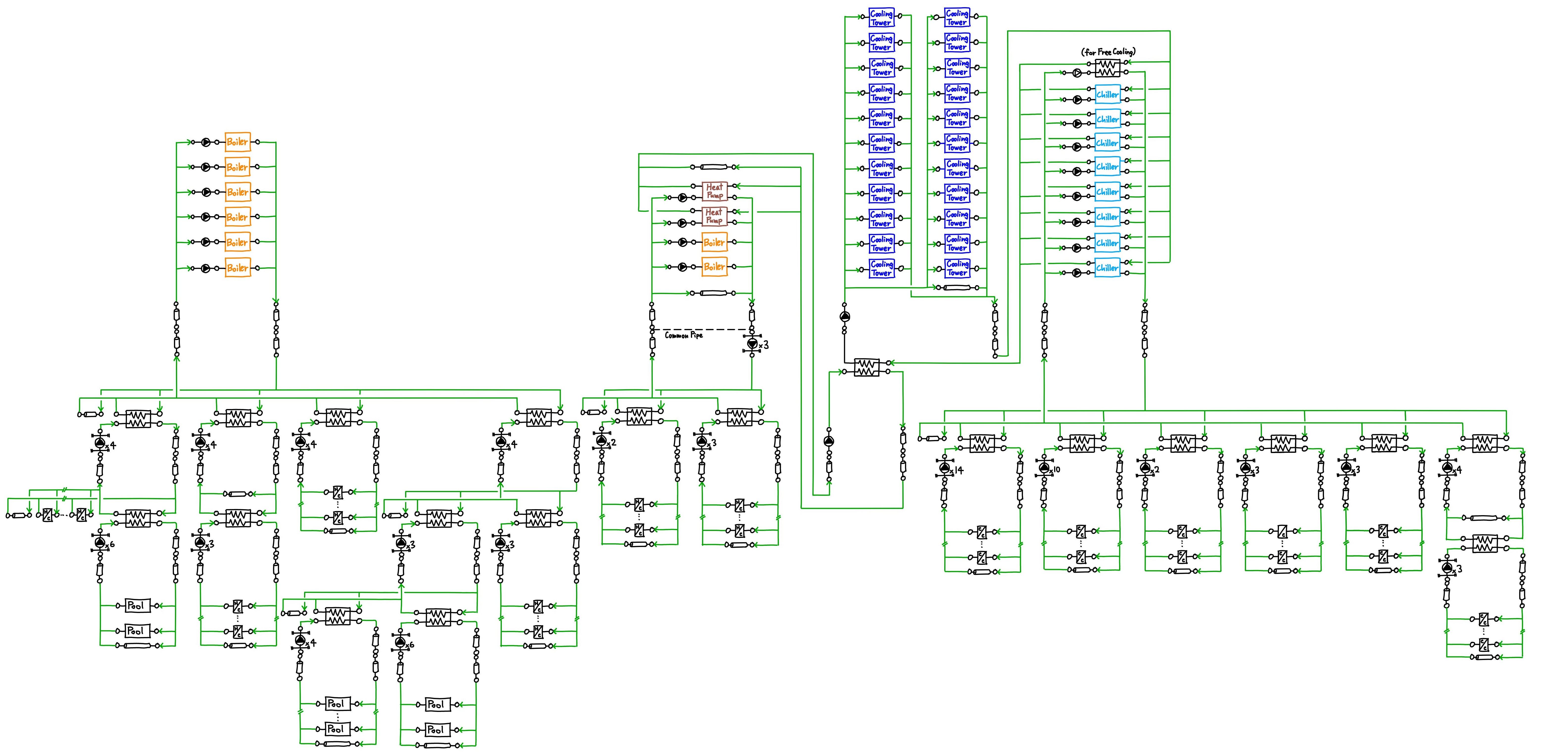

My model has complex plant loops. (The schematic is only for reference; the details of the plant loops are not the point of my question.)

According to the I/O Reference, the Maximum Plant Iterations field of ConvergenceLimits "can be raised for better accuracy with complex plants or lowered for faster speed with simple plants." I changed it from the default of 8 to 80. As a result, the simulation run time decreased slightly, which is counter-intuitive and contrary to what the I/O Reference suggests.
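For anyone who wants to repeat the comparison, here is a minimal sketch that writes the object with only Maximum Plant Iterations set. It assumes the field order Minimum System Timestep, Maximum HVAC Iterations, Minimum Plant Iterations, Maximum Plant Iterations, and that blank fields fall back to the IDD defaults; please check both against the Energy+.idd of your EnergyPlus version. The helper name `convergence_limits_object` is hypothetical.

```python
def convergence_limits_object(max_plant_iterations: int = 8) -> str:
    """Return IDF text for a ConvergenceLimits object (hypothetical helper).

    Only Maximum Plant Iterations is filled in; the other fields are left
    blank so EnergyPlus uses its IDD defaults. The field order is an
    assumption: verify it against your version's Energy+.idd.
    """
    if max_plant_iterations < 1:
        # Only a basic sanity check here; EnergyPlus enforces its own IDD limits.
        raise ValueError("Maximum Plant Iterations must be a positive integer")
    fields = [
        ("", "Minimum System Timestep {minutes}"),
        ("", "Maximum HVAC Iterations"),
        ("", "Minimum Plant Iterations"),
        (str(max_plant_iterations), "Maximum Plant Iterations"),
    ]
    lines = ["ConvergenceLimits,"]
    for i, (value, name) in enumerate(fields):
        # IDF objects separate fields with commas and end with a semicolon.
        terminator = ";" if i == len(fields) - 1 else ","
        lines.append(f"    {value + terminator:<25}!- {name}")
    return "\n".join(lines) + "\n"


print(convergence_limits_object(80))
```

Paste the printed object into the IDF in place of any existing ConvergenceLimits object, since the model should contain only one.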

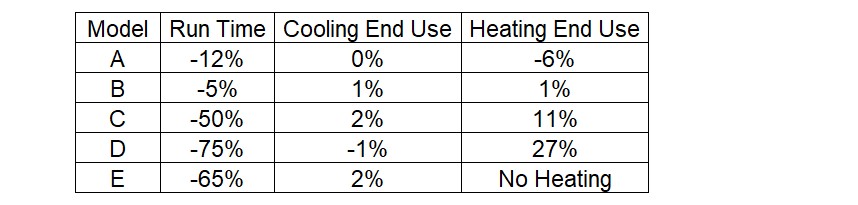

I repeated the test with my other models, which have different plant systems. The results are summarized in the table below.

Run time tends to decrease, but I don't know why. Does anyone know why this happens? Has anyone else changed Maximum Plant Iterations, and if so, what was the result? For now, I am keeping the default ConvergenceLimits values.

When we examined the impact of various inputs while creating the PerformancePrecisionTradeoffs input object, we sometimes found trends that were not what we expected. This one is certainly interesting. In general, because several parts of EnergyPlus are each iterating toward a solution every timestep, if one part takes more iterations to reach its solution, another part may end up iterating very little.
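The idea that one part iterating more can let another part iterate less can be illustrated with a toy two-level fixed-point problem. This is a hypothetical sketch, not EnergyPlus's actual solver: the outer loop stands in for a system-level iteration, and the inner loop stands in for a plant-level iteration capped at `max_inner` passes. The equations, tolerances, and the name `coupled_solve` are all made up for illustration. With a low cap, the inner loop exits unconverged and the outer loop needs more passes to settle.

```python
def coupled_solve(max_inner, inner_tol=1e-9, outer_tol=1e-6, max_outer=1000):
    """Toy two-level fixed-point iteration (hypothetical, NOT EnergyPlus's solver).

    Inner loop: relax y 10% of the way toward x, at most max_inner times.
    Outer loop: update x = 1 + 0.5 * y. The joint fixed point is x = y = 2.
    Returns (outer_passes, total_inner_iterations, x).
    """
    x, y = 0.0, 0.0
    inner_total = 0
    for outer in range(1, max_outer + 1):
        # Inner loop, capped at max_inner iterations; stops early once the
        # update falls below inner_tol.
        for _ in range(max_inner):
            y_new = 0.9 * y + 0.1 * x
            inner_total += 1
            converged = abs(y_new - y) < inner_tol
            y = y_new
            if converged:
                break
        # The outer update uses whatever (possibly unconverged) y it was given.
        x_new = 1.0 + 0.5 * y
        if abs(x_new - x) < outer_tol:
            return outer, inner_total, x_new
        x = x_new
    raise RuntimeError("outer loop did not converge")


for cap in (8, 80):
    outer, inner, x = coupled_solve(cap)
    print(f"cap={cap:>2}: outer passes={outer}, inner iterations={inner}, x={x:.6f}")
```

In this toy, raising the cap from 8 to 80 spends more inner iterations but needs fewer outer passes. If an outer pass costs much more than one inner iteration, the higher cap can finish sooner overall. That is one possible way to read the observed trend, not a statement about how EnergyPlus distributes its iterations.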