Dehumidification Scheme Subcools Too Low?

Hello all!

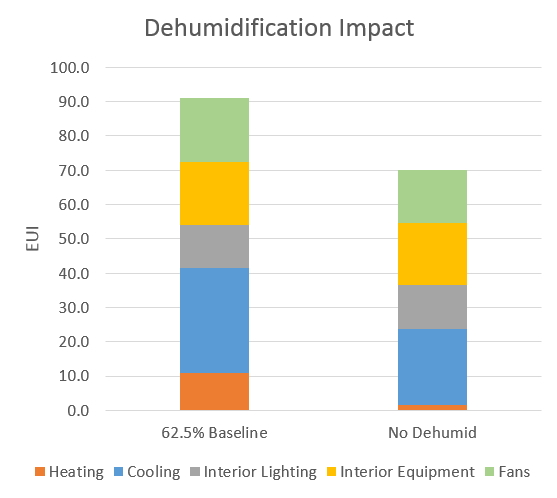

I'm attempting to model dehumidification in EnergyPlus and I'm getting some strange results. Comparing a constant 62.5% relative humidity setpoint against no dehumidification in New Orleans, LA, the EUI jumps from 70 kBtu/sf-yr to 91 kBtu/sf-yr. Cooling energy increases by about 30%, and heating energy increases by a factor of 5.6!

The project is a 400-square-foot classroom "shoebox" that I'm running some infiltration sensitivity analysis on, so I want to make sure I'm capturing the energy impact of dehumidification accurately. I'm using the HVACTemplate:System:Unitary template (everything is autosized), and I've entered "CoolReheat" in the "! - Dehumidification Control Type" field. I've also added a "ZoneControl:Humidistat" object that uses a compact schedule with "percent" schedule type limits, set to 62.5 all year. In the expanded file, the AirLoopHVAC:Unitary:Furnace:HeatCool object shows "CoolReheat" in its "! - Dehumidification Control Type" field, and an additional reheat coil has appeared. Everything looks good.
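For reference, the humidistat setup described above would look roughly like the sketch below. Only the 62.5 setpoint and the "percent" schedule type come from the description; all object, zone, and schedule names are placeholders, and the field names should be checked against the Input Output Reference for your EnergyPlus version. The humidifying field points at the same schedule only because some versions require it to be filled in; without a humidifier in the system it should have no effect.

```
ScheduleTypeLimits,
    Percent,                 !- Name
    0,                       !- Lower Limit Value
    100,                     !- Upper Limit Value
    Continuous;              !- Numeric Type

Schedule:Compact,
    Dehumid RH Setpoint,     !- Name (placeholder)
    Percent,                 !- Schedule Type Limits Name
    Through: 12/31,          !- Field 1
    For: AllDays,            !- Field 2
    Until: 24:00,            !- Field 3
    62.5;                    !- Field 4 (constant 62.5% RH all year)

ZoneControl:Humidistat,
    Classroom Humidistat,    !- Name (placeholder)
    Classroom Zone,          !- Zone Name (placeholder)
    Dehumid RH Setpoint,     !- Humidifying Relative Humidity Setpoint Schedule Name (assumed, see note above)
    Dehumid RH Setpoint;     !- Dehumidifying Relative Humidity Setpoint Schedule Name
```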

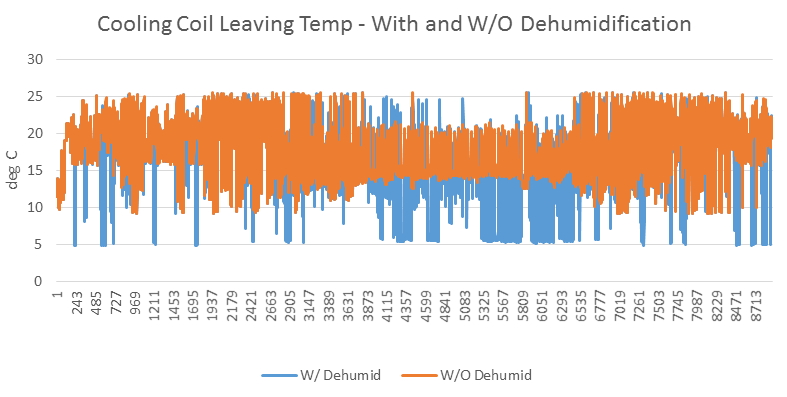

So, why are the reheat and cooling energy increases so high? The scheme seems to be working: only 6% of all hours are above 62.5% relative humidity. I think the main problem is that, with dehumidification, the cooling coil drops the supply air temperature to 5 deg C (42 deg F) in some cases (see the graph). This seems unreasonable and may be why there is so much reheat and cooling energy, since actual systems would rarely drop it below 55 deg F. I don't know how to control this, and I can't find a setpoint manager that controls the subcooling routine for the dehumidification.

There appears to be a "!-minimum supply air temperature" field in the "SetpointManager:SingleZone:Reheat" object, set to 13, but it doesn't seem to have any effect.
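To show which field I mean, here is a sketch of that object. Only the minimum supply air temperature of 13 is taken from the description above; the maximum temperature and every name and node name are placeholders for illustration, not values from the actual file.

```
SetpointManager:SingleZone:Reheat,
    Supply Air Temp Manager,      !- Name (placeholder)
    Temperature,                  !- Control Variable
    13,                           !- Minimum Supply Air Temperature {C}
    50,                           !- Maximum Supply Air Temperature {C} (placeholder value)
    Classroom Zone,               !- Control Zone Name (placeholder)
    Classroom Zone Air Node,      !- Zone Node Name (placeholder)
    Classroom Zone Supply Inlet,  !- Zone Inlet Node Name (placeholder)
    Air Loop Outlet Node;         !- Setpoint Node or NodeList Name (placeholder)
```

The supply air is leaving the coil well below the 13 in the third field, which is why I suspect this limit is not being applied while the unit is in dehumidification mode.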

Is there a way to control this? Am I missing anything?

Here is a link to the .idf in case it helps. https://drive.google.com/file/d/0B6aA...

Thanks ahead of time for your help!