Comparison of output file structure for OpenStudio measures run on the desktop vs. OpenStudio Server

I am writing a reporting measure that extracts a number of outputs from the SQL file. It also extracts the inputs that the user specified for the measures applied to the OSM model in each EnergyPlus run.

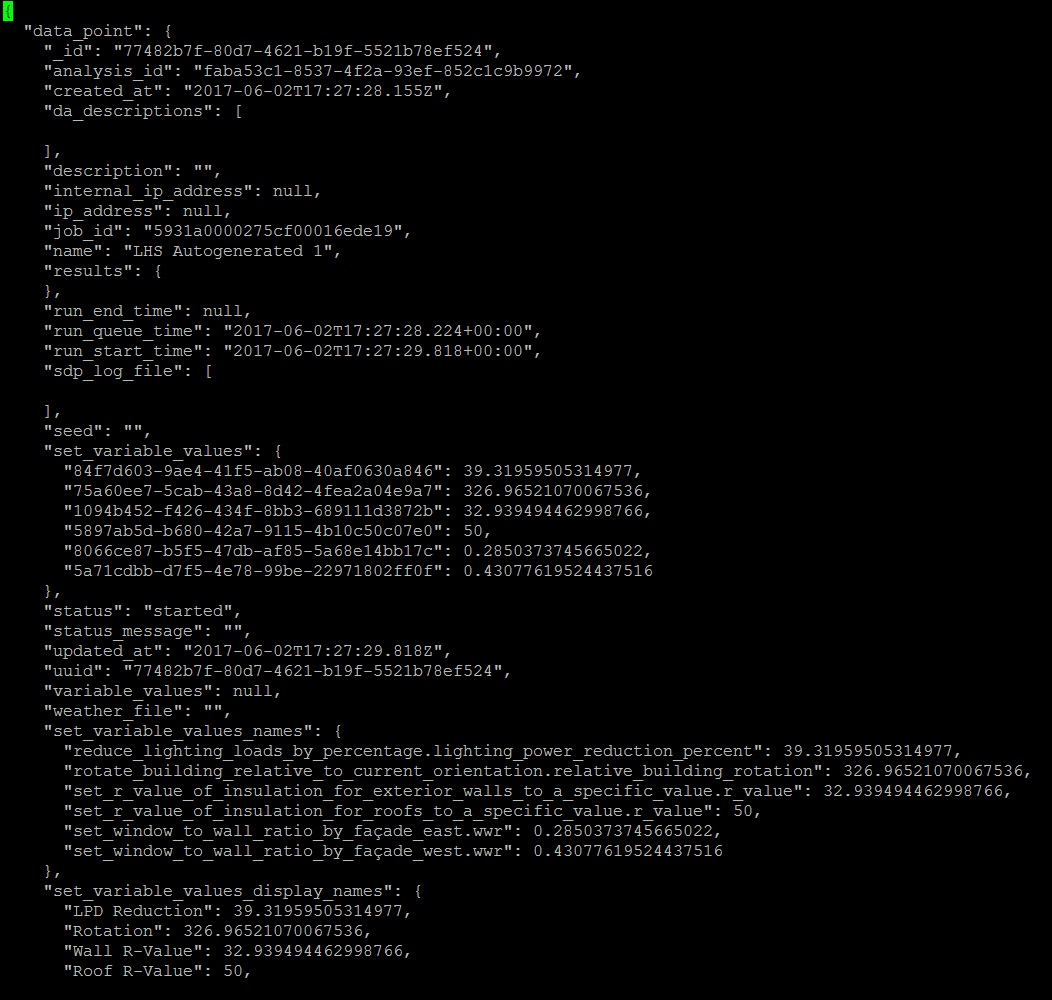

I have therefore tried to write some code that extracts those user inputs from each EnergyPlus run. On OpenStudio Server this is very easy, because each run produces a file called `data_point.json`.

As the attached screenshot shows, querying the user's inputs from this file is simple: you just read the `set_variable_values_names` section.
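A minimal Ruby sketch of that server-side lookup, using only the standard `json` library. It assumes `set_variable_values_names` sits under a top-level `data_point` key in `data_point.json`; that nesting is an assumption, so check it against your own file. The method name `read_server_inputs` is hypothetical.

```ruby
require 'json'

# Hypothetical helper: return the user-selected inputs recorded in an
# OpenStudio Server data_point.json file as a { name => value } hash.
# ASSUMPTION: the inputs live under data_point -> set_variable_values_names.
def read_server_inputs(path)
  data = JSON.parse(File.read(path))
  # Use the top level if the file is not wrapped in a "data_point" key
  section = data.fetch('data_point', data)
  section.fetch('set_variable_values_names', {})
end

# Usage:
# read_server_inputs('data_point.json').each do |name, value|
#   puts "#{name} = #{value}"
# end
```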

However, when I run simulations on the desktop using the OpenStudio Application, `data_point.json` is not produced, so I cannot use the same code on both the desktop and the server.

Instead, on the desktop I have to query `data_point_out.json`, which is not as clean or convenient as reading the `set_variable_values_names` section of `data_point.json`.
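Until the two environments produce the same file, one way to keep a single code path is to look for `data_point.json` first and fall back to `data_point_out.json` only when it is missing. This is a sketch under stated assumptions: the server layout is assumed as above, and `data_point_out.json` is assumed to be a hash keyed by measure name whose values are hashes of that measure's arguments. Both layouts are assumptions to verify against real files, and `read_user_inputs` is a hypothetical name; the directory to search is passed in by the caller.

```ruby
require 'json'

# Hypothetical helper: return user inputs as a flat { "name" => value } hash,
# whichever JSON file the run produced in run_dir.
def read_user_inputs(run_dir)
  server_file = File.join(run_dir, 'data_point.json')
  desktop_file = File.join(run_dir, 'data_point_out.json')

  if File.exist?(server_file)
    # OpenStudio Server. ASSUMED layout: data_point -> set_variable_values_names
    data = JSON.parse(File.read(server_file))
    data.fetch('data_point', data).fetch('set_variable_values_names', {})
  elsif File.exist?(desktop_file)
    # Desktop. ASSUMED layout: { measure_name => { arg_name => value, ... } }
    data = JSON.parse(File.read(desktop_file))
    flat = {}
    data.each do |measure, args|
      next unless args.is_a?(Hash) # skip any non-measure entries
      args.each { |arg, value| flat["#{measure}.#{arg}"] = value }
    end
    flat
  else
    {} # neither file was found
  end
end
```

Prefixing each desktop argument with its measure name avoids collisions when two measures share an argument name. The server's names may follow a different convention, so keys from the two branches will not necessarily match without an extra mapping step.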

Why is there a difference between the desktop and the server? How can I produce the file data_point.json on the desktop?

One other difference I noticed is that `workflow.osw` appears on the desktop but not on the server.