Hi, I have a Python script that runs thousands of simulations sequentially. For each run, I make a copy of the whole EnergyPlus directory and instantiate a new API object, while a reinforcement learning agent, kept in a separate object, interacts with the EnergyPlus wrapper object.

After a certain number of runs, I always hit a "Too many open files" error, or EnergyPlus itself fails to access a file because the process has reached the OS limit on open file handles.

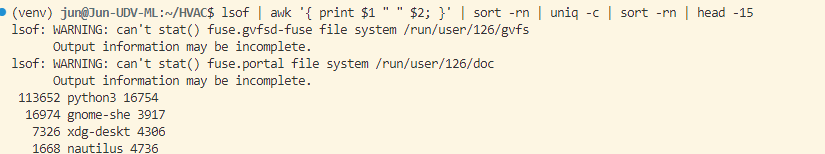

If I run the command `lsof | awk '{ print $1 " " $2; }' | sort -rn | uniq -c | sort -rn | head -15` on Linux, I find that my python3 process keeps opening more handles as execution time passes.

If I list the open file handles, I can see that they are a bunch of out files from EnergyPlus. I am deleting these files manually, but the handles remain attached to the Python process even after the files are deleted.
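For reference, the same check can be done from inside the Python process itself. This is a minimal sketch, assuming Linux (it reads `/proc/<pid>/fd`); the helper names `list_open_fds` and `deleted_fds` are illustrative and not part of EnergyPlus:

```python
import os


def list_open_fds(pid="self"):
    """Return {fd: target} for a process by reading /proc/<pid>/fd (Linux only)."""
    fd_dir = f"/proc/{pid}/fd"
    targets = {}
    for name in os.listdir(fd_dir):
        try:
            targets[int(name)] = os.readlink(os.path.join(fd_dir, name))
        except OSError:
            # The descriptor was closed between listdir() and readlink(),
            # e.g. the temporary descriptor listdir() itself used.
            continue
    return targets


def deleted_fds(pid="self"):
    """Return descriptors whose file was unlinked but is still held open."""
    return {
        fd: target
        for fd, target in list_open_fds(pid).items()
        if target.endswith(" (deleted)")  # suffix the Linux kernel appends
    }


if __name__ == "__main__":
    print(f"open descriptors: {len(list_open_fds())}")
    for fd, target in sorted(deleted_fds().items()):
        print(fd, target)
```

Printing `len(deleted_fds())` after each run would show whether the count grows by a fixed amount per simulation.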

One possible solution I saw is to increase the maximum number of handles available to a single process in Linux. However, this does not scale, since I plan to run up to millions of simulations in a single run.
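To see how close the process is to that limit, the standard `resource` module reports the per-process cap. A minimal sketch, again assuming Linux; `fd_usage` is an illustrative helper name:

```python
import os
import resource


def fd_usage():
    """Return (open_count, soft_limit, hard_limit) for the current process."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # Linux only. The count includes the temporary descriptor that
    # os.listdir() opens while reading the directory, so it may be one too high.
    open_count = len(os.listdir("/proc/self/fd"))
    return open_count, soft, hard


if __name__ == "__main__":
    open_count, soft, hard = fd_usage()
    print(f"open: {open_count}, soft limit: {soft}, hard limit: {hard}")
```

If each run leaks a fixed number of handles, raising the soft limit only delays the failure by a proportional number of runs.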

Is there a way to close the file handles opened by the EnergyPlus simulation? Any ideas for a different approach would also be greatly appreciated.
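One different approach I am considering is to run each simulation in a short-lived child process, since the OS closes all of a process's descriptors when it exits. A minimal sketch under stated assumptions: `CHILD_CODE` and `run_isolated` are hypothetical names, the inline child script is only a placeholder for the real EnergyPlus run, and the `reward` field stands in for whatever the agent would need back from each run:

```python
import json
import subprocess
import sys

# Placeholder for a script that runs ONE simulation and prints a JSON result.
# The real script would create the EnergyPlus API object here (assumption).
CHILD_CODE = """
import json, sys
run_id = int(sys.argv[1])
print(json.dumps({"run_id": run_id, "reward": 0.0}))
"""


def run_isolated(run_id, timeout=600):
    """Run one simulation in a fresh interpreter and return its JSON result."""
    proc = subprocess.run(
        [sys.executable, "-c", CHILD_CODE, str(run_id)],
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if the child fails
    )
    # Any handles the child leaked are released by the OS once it has exited.
    return json.loads(proc.stdout)


if __name__ == "__main__":
    for run_id in range(3):
        print(run_isolated(run_id))
```

The drawback is that the agent lives in the parent process, so its interaction with the simulation would have to cross the process boundary, which this sketch does not address.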

Thanks.