Dear all,

I'm developing new reinforcement learning (RL) control strategies and I'm using EnergyPlus (E+) as a test bed for the algorithms. So far I have successfully used only the following actuator to optimize the building's energy consumption by varying the supply air temperature setpoint:

```
EnergyManagementSystem:Actuator,
    t_in_air,                 !- Name
    VAV SYS 1 OUTLET NODE,    !- Actuated Component Unique Name
    System Node Setpoint,     !- Actuated Component Type
    Temperature Setpoint;     !- Actuated Component Control Type
```

Since I would like to give the agent more control actions, I have started to also control the fan mass flow rate and have created the following actuator:

```
EnergyManagementSystem:Actuator,
    mdot_fan,                 !- Name
    SUPPLY FAN 1,             !- Actuated Component Unique Name
    Fan,                      !- Actuated Component Type
    Fan Air mass Flow Rate;   !- Actuated Component Control Type
```
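
To double-check that both actuators are actually registered by E+ (for example, that `SUPPLY FAN 1` really exposes a `Fan Air Mass Flow Rate` actuator for the fan object type used in the model), one option is to ask E+ to write its EMS dictionaries and the Erl trace to the `.edd` output file. A minimal sketch of the reporting object, assuming it is not already present in the IDF:

```
! Diagnostic only: lists available actuators/internal variables and
! traces Erl program execution in the .edd file.
Output:EnergyManagementSystem,
    Verbose,      !- Actuator Availability Dictionary Reporting
    Verbose,      !- Internal Variable Availability Dictionary Reporting
    Verbose;      !- EMS Runtime Language Debug Output Level
```

Verbose Erl tracing can make the `.edd` file very large for long runs; `ErrorsOnly` in the last field is a lighter alternative.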

I pass and set my control actions using ExternalInterface:Variable, EnergyManagementSystem:ProgramCallingManager and EnergyManagementSystem:Program objects:

```
EnergyManagementSystem:ProgramCallingManager,
    act_calls1,                     !- Name
    InsideHVACSystemIterationLoop,  !- EnergyPlus Model Calling Point
    Act;                            !- Program Name 1

EnergyManagementSystem:Program,
    Act,                            !- Name
    SET t_in_air = tair,            !- Program Line 1
    SET mdot_fan = mdot;            !- Program Line 2
```
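
The program above passes the external values straight to the actuators. If the RL agent can propose values outside a physically meaningful range, one option is to clamp them in Erl before they reach the actuators. The sketch below is a variant of `Act` under that assumption; the 12 to 30 °C supply temperature limits and the 0 kg/s lower flow bound are hypothetical placeholders, not values taken from the model:

```
! Variant of Act with clamping. The limits below are HYPOTHETICAL
! placeholders; replace them with the system's design limits.
EnergyManagementSystem:Program,
    Act,                            !- Name
    SET t_in_air = tair,            !- Pass external supply air setpoint
    IF t_in_air < 12.0,             !- Hypothetical lower limit [C]
    SET t_in_air = 12.0,
    ELSEIF t_in_air > 30.0,         !- Hypothetical upper limit [C]
    SET t_in_air = 30.0,
    ENDIF,
    SET mdot_fan = mdot,            !- Pass external fan mass flow rate
    IF mdot_fan < 0.0,              !- Mass flow rate cannot be negative
    SET mdot_fan = 0.0,
    ENDIF;
```

An upper bound on `mdot_fan` (e.g. the fan's maximum flow rate) could be added with the same `IF`/`ENDIF` pattern.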

```
ExternalInterface:Variable,
    mdot,                           !- Name
    1;                              !- Initial Value

ExternalInterface:Variable,
    tair,                           !- Name
    1;                              !- Initial Value
```
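
To compare the values sent by the RL agent with what E+ actually applies at the node, a possible approach is to report both from E+ itself. The sketch below assumes that the `ExternalInterface:Variable` names (`tair`, `mdot`) can be referenced as Erl global variables by `EnergyManagementSystem:OutputVariable`; the report names `EMS tair received` and `EMS mdot received` are hypothetical labels:

```
! Report the values received from the external interface.
! "EMS tair received" / "EMS mdot received" are illustrative names.
EnergyManagementSystem:OutputVariable,
    EMS tair received,        !- Name
    tair,                     !- EMS Variable Name
    Averaged,                 !- Type of Data in Variable
    SystemTimestep,           !- Update Frequency
    ,                         !- EMS Program or Subroutine Name
    C;                        !- Units

EnergyManagementSystem:OutputVariable,
    EMS mdot received,        !- Name
    mdot,                     !- EMS Variable Name
    Averaged,                 !- Type of Data in Variable
    SystemTimestep,           !- Update Frequency
    ,                         !- EMS Program or Subroutine Name
    kg/s;                     !- Units

! Received values vs. setpoint, temperature and flow seen at the node.
Output:Variable, *, EMS tair received, Timestep;
Output:Variable, *, EMS mdot received, Timestep;
Output:Variable, VAV SYS 1 OUTLET NODE, System Node Setpoint Temperature, Timestep;
Output:Variable, VAV SYS 1 OUTLET NODE, System Node Temperature, Timestep;
Output:Variable, VAV SYS 1 OUTLET NODE, System Node Mass Flow Rate, Timestep;
```

If the received values match the agent's actions but the node setpoint does not, the discrepancy lies inside E+ rather than in the co-simulation exchange.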

I have tried this setup with both AirTerminal:SingleDuct:ConstantVolume:NoReheat and AirTerminal:SingleDuct:VAV:Reheat terminal units.

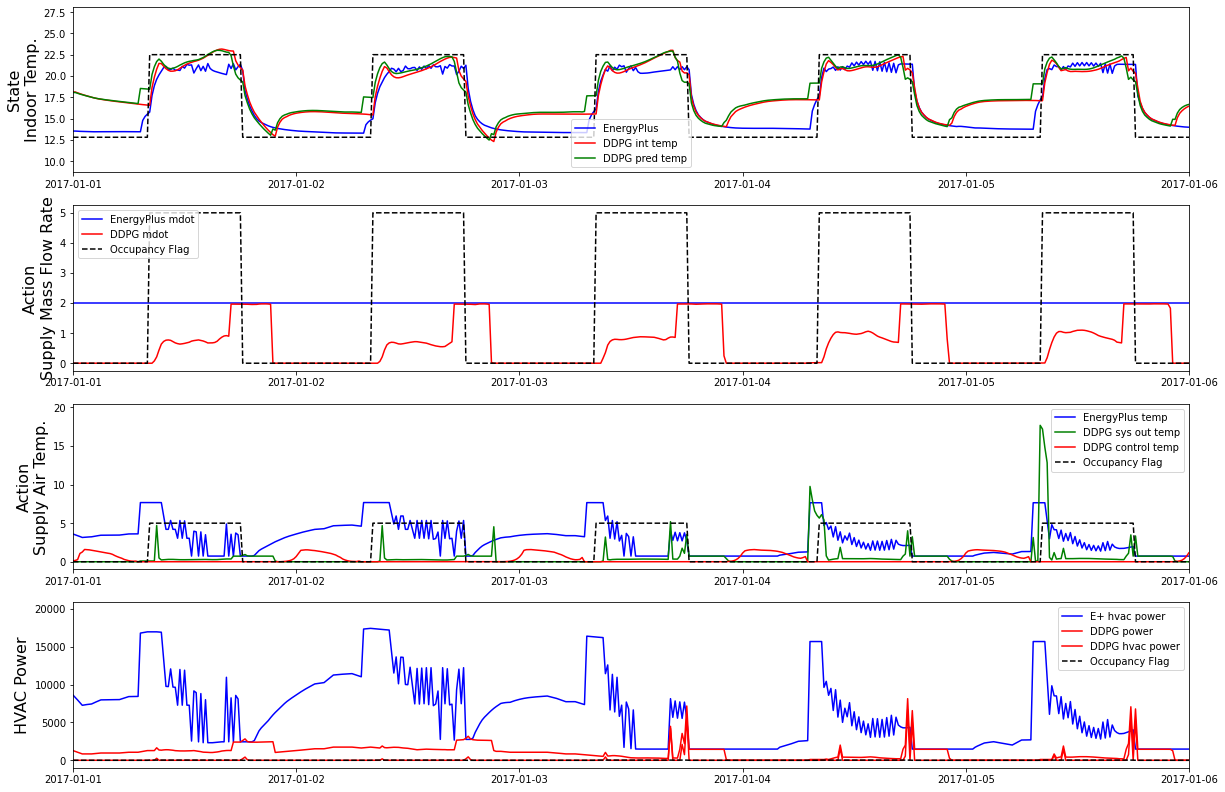

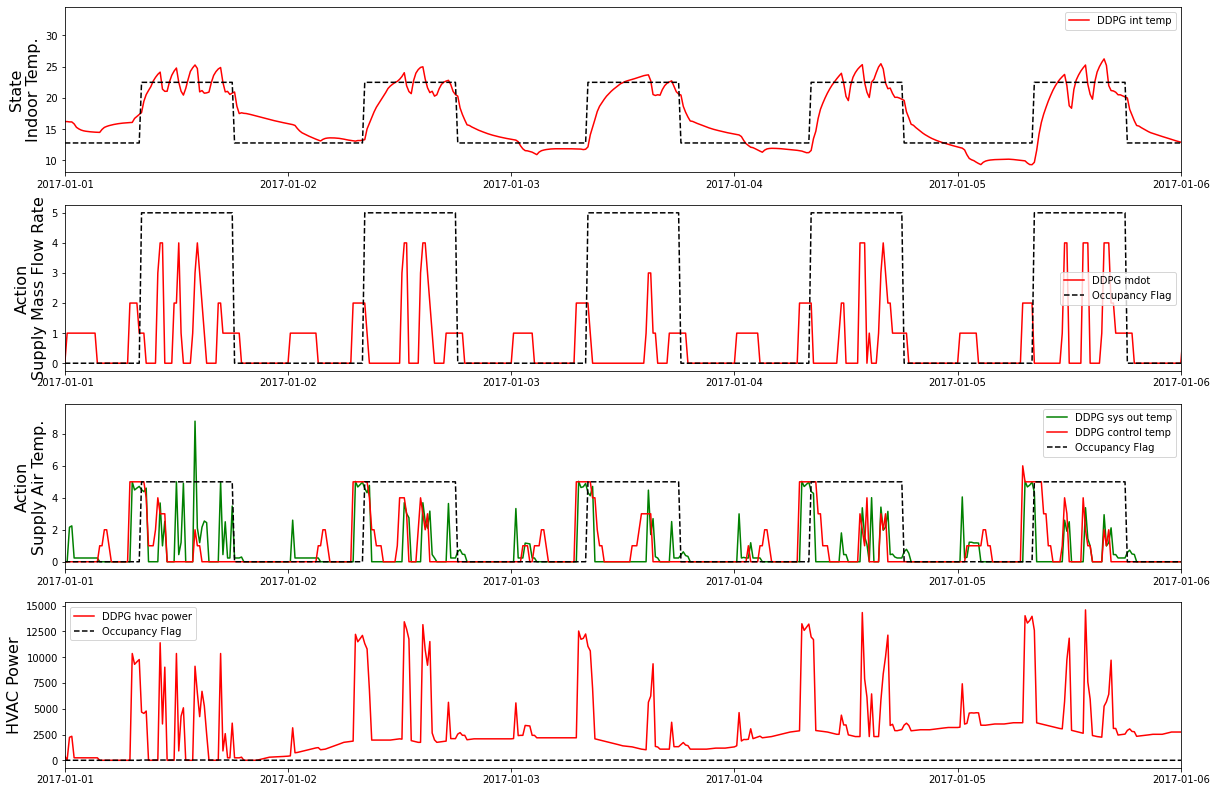

With AirTerminal:SingleDuct:ConstantVolume:NoReheat, the RL algorithm discovers a strange behaviour of E+: the temperature follows the E+ baseline (in blue), but the HVAC energy consumption is almost 0.

With AirTerminal:SingleDuct:VAV:Reheat, the VAV SYS 1 OUTLET NODE does not follow the control actions that I pass to E+ (green is what E+ does, red is what I wanted it to do).

At the links below you can find zip files with the IDF, the control_actions and the E+ output for both cases.

https://www.dropbox.com/s/ektupyzrxaqbue7/VAV_costantVolume.zip?dl=0

https://www.dropbox.com/s/ev9ofl1wptpx0i5/VAV_reheat.zip?dl=0