My general question is about designing a low-cost, high-throughput EnergyPlus (E+) computation server (Linux) focused on running many simulations in parallel. For those familiar with server design: I'm thinking commodity computing as opposed to high-performance computing. We run a LOT of simulations at my company. In addition to our existing hardware, we also use web-based resources. Despite the convenience of web-based computing, I'm not certain the current options are cost-effective at large scale.
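One way to frame the cost question is cost per completed simulation. Below is a back-of-the-envelope Python sketch. It assumes each core (or vCPU) runs one E+ job at a time, and every input (hardware price, lifetime, average power draw, electricity price, cloud hourly rate, seconds per simulation) is a placeholder you would replace with your own quotes and measured runtimes. The function names are hypothetical.

```python
# Hypothetical cost-per-simulation model. All inputs are placeholders,
# not measured data or real price quotes.


def sims_per_hour(cores: int, seconds_per_sim: float) -> float:
    """Throughput if each core runs one single-threaded E+ job back to back."""
    return cores * 3600.0 / seconds_per_sim


def owned_cost_per_sim(hardware_cost: float, lifetime_hours: float,
                       avg_power_kw: float, price_per_kwh: float,
                       cores: int, seconds_per_sim: float,
                       utilization: float = 1.0) -> float:
    """Amortized hardware plus electricity per completed simulation.

    `utilization` is the fraction of wall-clock time the server is busy;
    `avg_power_kw` is treated as the average draw over all hours, busy or idle.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    cost_per_hour = hardware_cost / lifetime_hours + avg_power_kw * price_per_kwh
    return cost_per_hour / (sims_per_hour(cores, seconds_per_sim) * utilization)


def cloud_cost_per_sim(price_per_hour: float, vcpus: int,
                       seconds_per_sim: float) -> float:
    """Per-simulation cost for an instance billed only while jobs run.

    Assumes one job per vCPU; if vCPUs are hyperthreads, check whether that
    actually holds for your models before trusting the result.
    """
    return price_per_hour / sims_per_hour(vcpus, seconds_per_sim)
```

With purely illustrative inputs, `sims_per_hour(32, 120)` gives 960 simulations per hour. The `utilization` term is the main lever in this model: an owned server that sits idle half the time costs twice as much per simulation, while `cloud_cost_per_sim` assumes instances are billed only for the hours they run.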

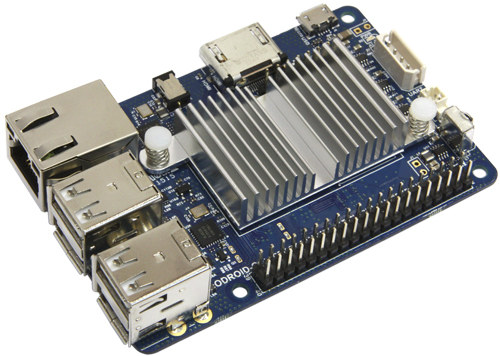

Related questions:

1. Is anyone aware of studies of E+ performance on different hardware (e.g., AMD vs. Intel, memory, CPU clock speed vs. number of cores)?
2. Has anyone run E+ simulations on ARM architectures? If so, what was your experience?
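For the clock-speed-versus-core-count question, one approach is to measure throughput (simulations per hour) on each candidate machine while varying the number of concurrent jobs. Below is a minimal Python sketch of such a harness. It assumes the `energyplus` executable is on the PATH, that each run is essentially single-threaded, and that the model, weather, and output paths are hypothetical placeholders; the helper names are also illustrative.

```python
import subprocess
import time
from concurrent.futures import ThreadPoolExecutor


def energyplus_cmd(idf: str, epw: str, out_dir: str) -> list[str]:
    """Build an EnergyPlus command line (-w weather file, -d output directory)."""
    return ["energyplus", "-w", epw, "-d", out_dir, idf]


def run_one(cmd: list[str]) -> int:
    """Run one job to completion, discarding console output; return the exit code."""
    return subprocess.run(cmd, stdout=subprocess.DEVNULL,
                          stderr=subprocess.DEVNULL).returncode


def throughput(cmds: list[list[str]], workers: int) -> float:
    """Run every command with at most `workers` concurrent processes; return jobs/hour."""
    start = time.perf_counter()
    # Threads are enough here: each thread just waits on a separate OS process.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        codes = list(pool.map(run_one, cmds))
    elapsed = time.perf_counter() - start
    failed = sum(code != 0 for code in codes)
    if failed:
        raise RuntimeError(f"{failed} of {len(cmds)} jobs failed")
    return len(cmds) * 3600.0 / elapsed


def scaling_sweep(cmds: list[list[str]], worker_counts: list[int]) -> dict[int, float]:
    """Measure throughput for each concurrency level in `worker_counts`."""
    return {w: throughput(cmds, w) for w in worker_counts}
```

For instance, building `cmds = [energyplus_cmd("model.idf", "weather.epw", f"out/run{i}") for i in range(64)]` and calling `scaling_sweep(cmds, [1, 4, 8, 16, 32])` would report jobs per hour at each concurrency level. Giving every run its own output directory keeps concurrent jobs from overwriting each other's files. Where the curve flattens suggests some shared resource (cores, memory bandwidth, or disk) is saturating, though further profiling would be needed to tell which. Running the same sweep on AMD, Intel, and ARM machines (with an ARM build of EnergyPlus, if one is available for your version) gives a like-for-like comparison.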

Obligatory Star Wars quote:

> **Vader:** The Emperor does not share your optimistic appraisal of the situation.
>
> **Other Guy:** But he asks the impossible! I need more men!