I am not sure if I am way out of the ballpark here, but I thought I would at least ask this question to glean the community's thoughts on the topic. I do not have a sufficient background in lighting to know whether I am missing something fundamental, so please correct me where I am wrong and point out any flaws in my idea.

I work as a programmer/building physics consultant for a large architectural firm. I am finding that a great deal of time is spent building Rhino models to undertake glare analysis in Diva, and then even more time (on the designer's side) to understand the results.

With virtual reality technology advancing steadily, it has become apparent to me that it would be far more intuitive and straightforward to have the building designer experience the space and its daylighting effects first hand.

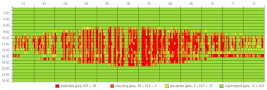

The computer could identify and rank the times of the year when glare is likely to occur (something like the Daysim annual glare display) and display them on an interactive interface within the virtual reality space, so that the user could toggle between the times of the year when glare is an issue and experience them first hand.
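As a rough illustration of the ranking step, here is a minimal Python sketch. It assumes hourly daylight glare probability (DGP) values have already been exported from an annual glare simulation; the `GlareHour` record and the `rank_glare_hours` and `classify` helpers are hypothetical names for illustration, not part of Diva or Daysim. The comfort bands (0.35, 0.40, 0.45) are the commonly cited DGP classes and are used here as an assumption.

```python
from dataclasses import dataclass
from typing import List

# Commonly cited DGP comfort bands (an assumption; adjust to your own criteria).
# Checked from the highest threshold down, so the first match wins.
DGP_CLASSES = [
    (0.45, "intolerable"),
    (0.40, "disturbing"),
    (0.35, "perceptible"),
]


@dataclass
class GlareHour:
    """One hypothetical simulated hour: date, hour of day and its DGP (0..1)."""
    month: int
    day: int
    hour: int
    dgp: float


def classify(dgp: float) -> str:
    """Map a DGP value onto the assumed comfort bands."""
    for threshold, label in DGP_CLASSES:
        if dgp >= threshold:
            return label
    return "imperceptible"


def rank_glare_hours(hours: List[GlareHour], threshold: float = 0.35,
                     top_n: int = 10) -> List[GlareHour]:
    """Return up to top_n hours whose DGP meets the threshold, worst first."""
    flagged = [h for h in hours if h.dgp >= threshold]
    flagged.sort(key=lambda h: h.dgp, reverse=True)
    return flagged[:top_n]


if __name__ == "__main__":
    # Made-up sample values, purely for demonstration.
    sample = [
        GlareHour(12, 21, 15, 0.48),
        GlareHour(6, 21, 12, 0.22),
        GlareHour(3, 21, 9, 0.41),
        GlareHour(9, 21, 16, 0.37),
    ]
    for h in rank_glare_hours(sample, top_n=3):
        print(f"{h.month:02d}-{h.day:02d} {h.hour:02d}:00  "
              f"DGP={h.dgp:.2f}  {classify(h.dgp)}")
```

Each entry in the ranked list could then become one toggle point in the VR interface, setting the sun position and sky for that hour before rendering.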

Would something like this be possible in VR? Is there a gaming engine that accurately simulates real lighting effects and glare? I know this is theoretically possible, but is it too computationally intensive for VR, and is that why it hasn't been done?

Please let me know your thoughts,