Unwanted sub-timestep callbacks in EMS (Python API)

Hello,

I am running some test scripts with the EMS Python API and am seeing redundant callbacks within a single simulation timestep. It doesn't happen throughout the entire simulation, but it still happens quite often. My understanding is that there should be exactly one callback per timestep.

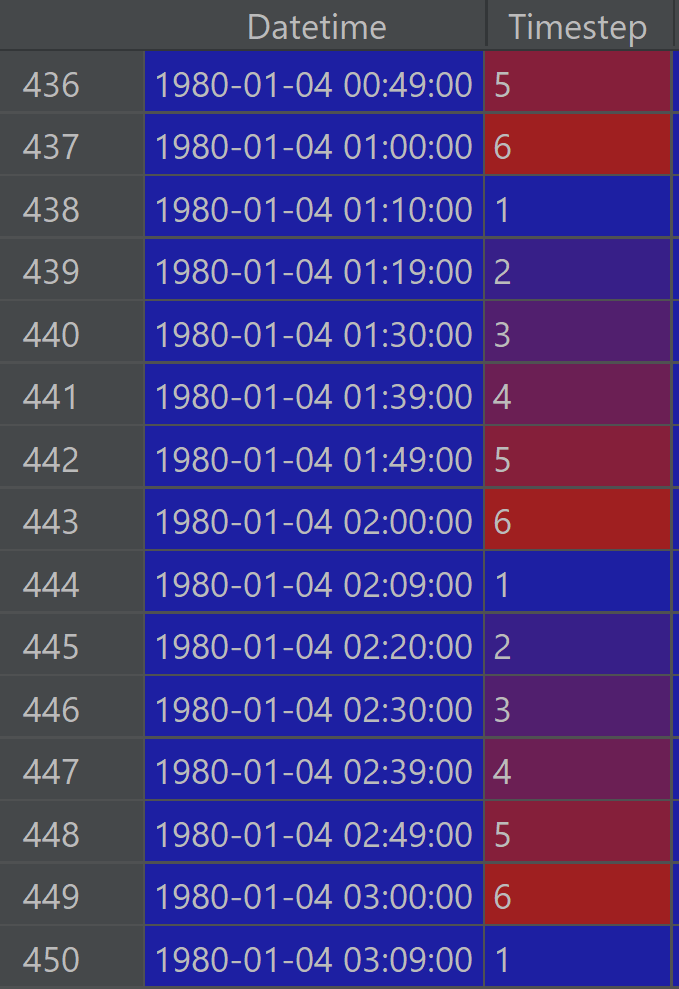

This is easier to explain visually. The model uses 6 timesteps per hour, and the calling point (chosen somewhat arbitrarily) is "Callback After Predictor After HVAC Managers".

Below is a dataframe snippet from the original simulation in which I noticed the issue. The first hour is normal, but after that my callback function runs more often than it should. The Datetime is also messed up, occasionally going backward (yellow highlight). Luckily, the data values are identical across these sub-timesteps.

For a 365-day simulation, this resulted in 80,198 callbacks, far more than the expected (6 timesteps/hr) × (24 hr/day) × (365 days) = 52,560 timesteps, i.e. 52,560 callbacks.
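A quick check of those numbers (all values are taken from this post) shows how many callbacks were redundant:

```python
# Expected callback count if the calling point fired exactly once per zone timestep.
TIMESTEPS_PER_HOUR = 6   # model setting mentioned above
HOURS_PER_DAY = 24
DAYS = 365               # full-year run period

expected = TIMESTEPS_PER_HOUR * HOURS_PER_DAY * DAYS
observed = 80_198        # callbacks counted in the original run

# Callbacks beyond one-per-timestep are the redundant ones.
extra = observed - expected
print(expected, extra)   # → 52560 27638
```

So roughly 27.6k callbacks were extra, about half again as many as expected.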

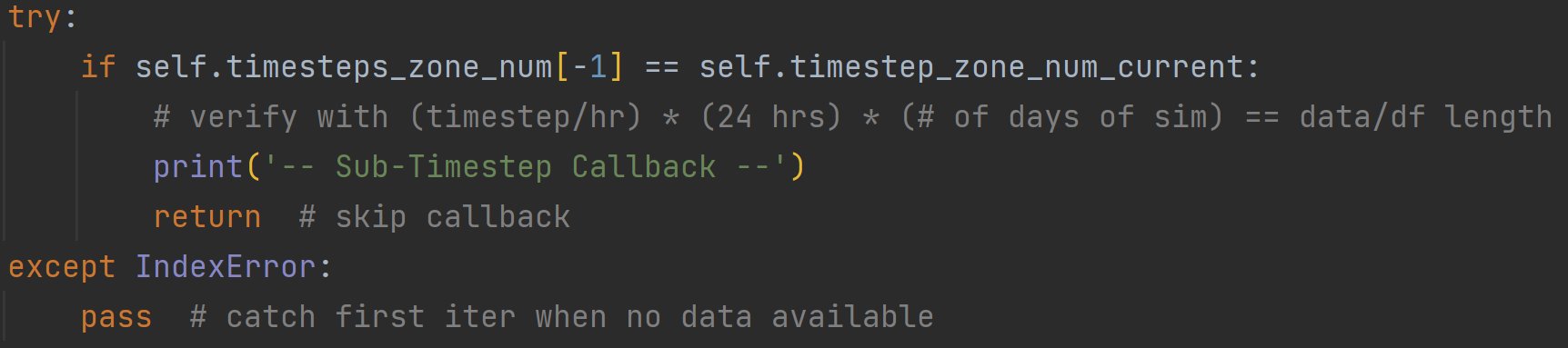

To work around this, I added a bit of Python code that checks whether the timestep read in the callback is the same as the previous one and, if so, ignores that callback.
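The exact snippet isn't reproduced in this post, so here is a minimal sketch of that kind of check. It assumes each callback can build a key identifying the current zone timestep; the `(day_of_year, hour, zone_timestep_number)` key and the `OncePerTimestep` wrapper are hypothetical names for illustration. In a real run the key values would come from the EnergyPlus data exchange API inside the callback (e.g. functions along the lines of `day_of_year`, `hour`, `zone_time_step_number`; check the exact names in your pyenergyplus version).

```python
from typing import Callable, Optional, Tuple

# Hypothetical key identifying one zone timestep: (day_of_year, hour, zone_timestep_number).
TimestepKey = Tuple[int, int, int]


class OncePerTimestep:
    """Wrap a handler so it is skipped when the key repeats the previous callback's key.

    Mirrors the workaround described above: if the timestep read in this callback
    equals the one from the previous callback, the call is ignored.
    """

    def __init__(self, handler: Callable[[TimestepKey], None]) -> None:
        self._handler = handler
        self._last_key: Optional[TimestepKey] = None
        self.skipped = 0  # number of redundant callbacks ignored

    def __call__(self, key: TimestepKey) -> bool:
        if key == self._last_key:
            self.skipped += 1
            return False  # same timestep as the previous callback: ignore it
        self._last_key = key
        self._handler(key)
        return True


# Usage with made-up keys: timestep (1, 0, 2) is reported twice in a row.
rows = []
guard = OncePerTimestep(rows.append)
for k in [(1, 0, 1), (1, 0, 2), (1, 0, 2), (1, 0, 3)]:
    guard(k)
print(rows, guard.skipped)  # → [(1, 0, 1), (1, 0, 2), (1, 0, 3)] 1
```

Because this only compares against the immediately preceding callback, a key that reappears after a different one (for example during warmup days, which repeat the same dates) would be handled again; skipping callbacks while the warmup flag is set, or requiring the key to strictly increase, would be stricter variants.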

This gave good results: a chronological Datetime, no redundant rows, and 52,560 callbacks, as seen below.

However, I am not confident this is a real solution. I am curious why I was getting these redundant callbacks (sub-timesteps) in the first place, and whether this points to a bug in my model or in the API.

Thank you

I think this may be related to my choice of calling point, since the number of redundant callbacks changed depending on which calling point I used. I don't know much about the solution algorithms used at each zone and system timestep, but I wonder whether the predictor-corrector iteration has something to do with it.
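One way to picture that hunch is a toy model, not EnergyPlus internals. It rests on two assumptions: that this calling point fires once per HVAC system timestep, and that a 10-minute zone timestep is sometimes split into several shorter system timesteps. Under those assumptions, the callback count grows with the amount of sub-stepping while the number of distinct zone timesteps stays fixed. `simulate_callbacks` and the substep pattern below are made up for illustration.

```python
from collections import Counter
from typing import List, Tuple

ZONE_STEP_MIN = 10  # 6 zone timesteps per hour, as in the model above


def simulate_callbacks(substeps_per_zone_step: List[int]) -> List[Tuple[int, float]]:
    """Return (zone_step_index, minutes_into_zone_step) for each hypothetical callback.

    ASSUMPTION: one callback per system timestep, with each zone timestep
    split into n equal system substeps.
    """
    events = []
    for zone_step, n in enumerate(substeps_per_zone_step):
        dt = ZONE_STEP_MIN / n
        for k in range(1, n + 1):
            events.append((zone_step, k * dt))  # end of the k-th system substep
    return events


# Made-up pattern: first hour unsplit, second hour with some zone steps split into 2 or 3.
pattern = [1] * 6 + [2, 1, 3, 1, 1, 2]
events = simulate_callbacks(pattern)
per_zone_step = Counter(zone_step for zone_step, _ in events)

print(len(events), len(per_zone_step))  # → 16 12
```

In this toy model, 12 zone timesteps produce 16 callbacks, and grouping by zone timestep recovers one row per timestep. A calling point that runs once per zone timestep rather than once per system timestep would not show the extra rows, which would be consistent with the count depending on the calling point.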