How can I fix errors related to truncated images?

I was trying to understand the new photon mapping extension and rendered several views using the rad program.

My settings were:

QUALITY= H

DETAIL= H

VARIABILITY= high

INDIRECT= 1

PENUMBRAS= true

RESOLUTION= 1844 863

ZONE= I 0 60 0 60 -1.5873 4.00685

EXPOSURE= 1

render= -ad 10000

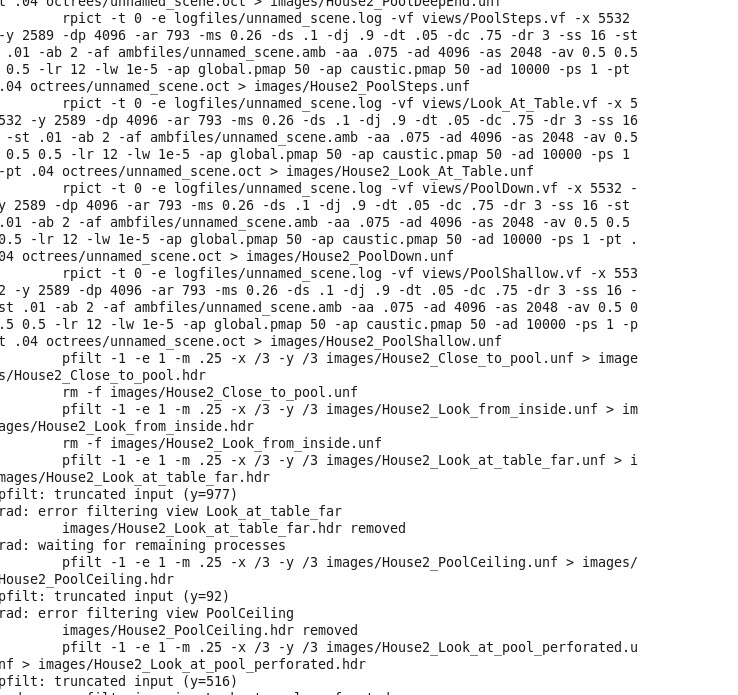

I ran into errors after the images finished rendering. A screenshot of my terminal is below. Error messages are in the lower part of the image:

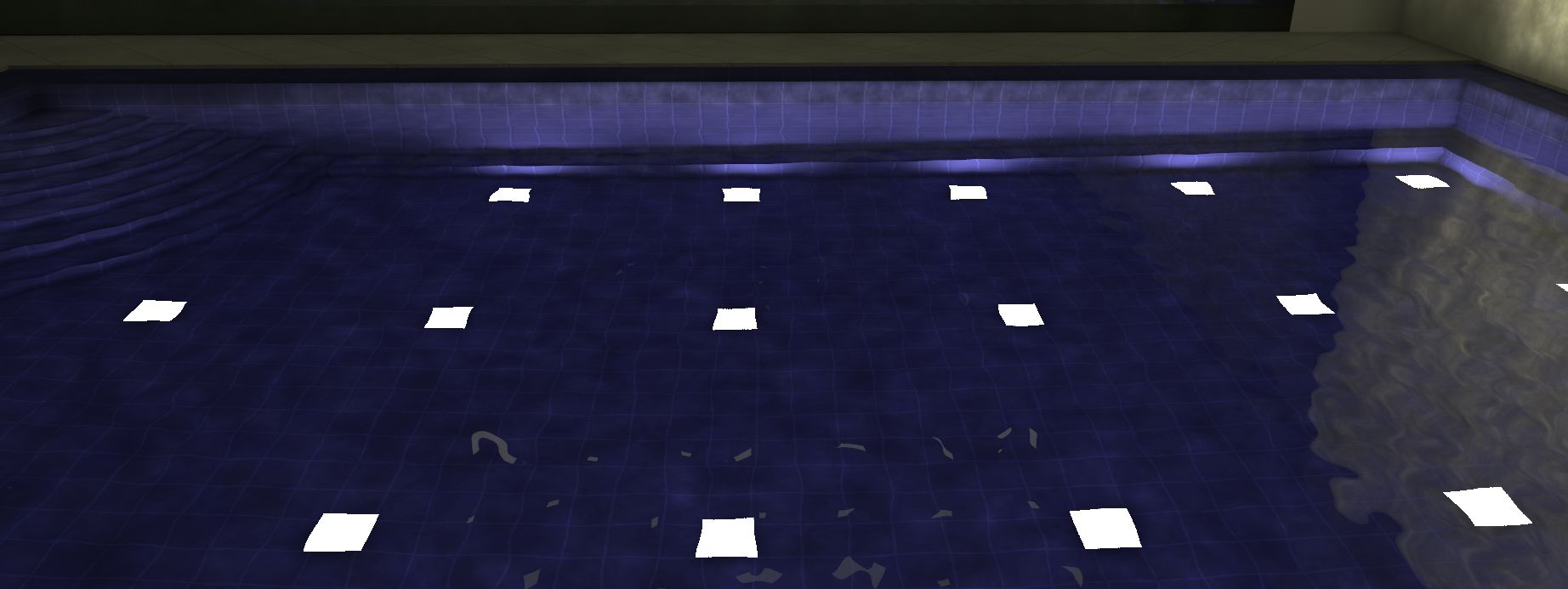

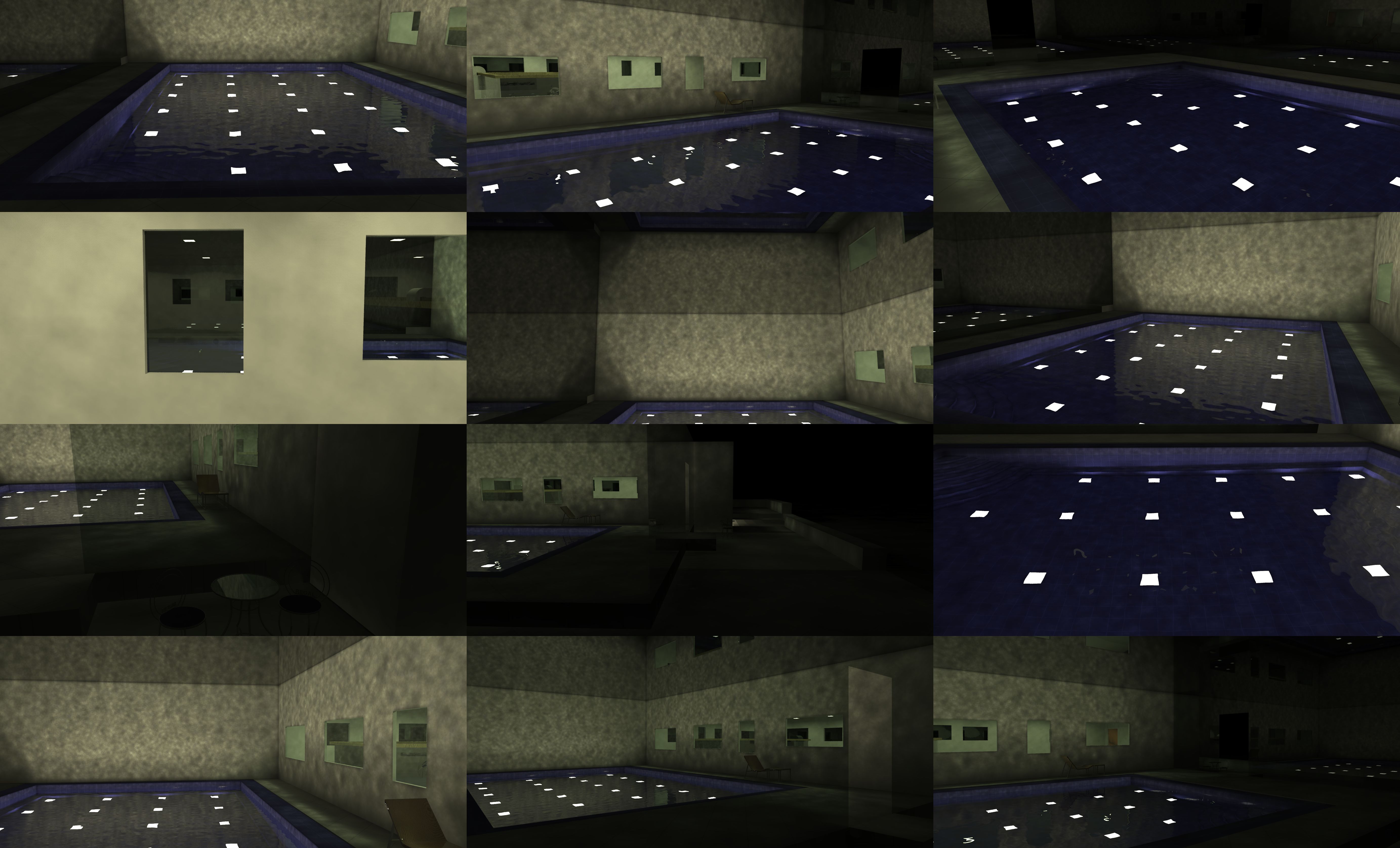

I ran pfilt on the images and found that almost all of the unfinished images were truncated along the vertical axis. Some of the images, which should all have the same dimensions, are below:

I got "pfilt: warning - partial frame (70%)" on all of these images, with the percentage ranging from 50% to 96%. I googled 'pfilt partial error' but found nothing except http://arch.xtr.jp/radiance/tips.htm. Is there anything I can do to avoid this error in the future?
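pfilt's "partial frame" warning indicates that a picture file ends before all of its scanlines were written, which would match images that look cut off vertically. To check which pictures are affected before filtering them, here is a minimal Python sketch (not a Radiance tool). It assumes the usual picture layout (text header, blank line, a resolution line such as "-Y 863 +X 1844", then RGBE scanlines) and handles only new-style run-length-encoded or uncompressed scanlines; old-style run-length encoding is not handled. The helper names are hypothetical.

```python
import sys


def _read_resolution(f):
    """Skip the text header; return (number of scanlines, pixels per scanline)."""
    while True:
        line = f.readline()
        if not line:
            raise ValueError("file ends inside the header")
        if line.strip() == b"":  # an empty line terminates the header
            break
    # e.g. b"-Y 863 +X 1844": first number = scanlines, second = scanline length
    tokens = f.readline().split()
    if len(tokens) != 4:
        raise ValueError("cannot parse resolution line")
    return int(tokens[1]), int(tokens[3])


def _read_scanline(f, width):
    """Consume one scanline; return False if the file ends part-way through it."""
    head = f.read(4)
    if len(head) < 4:
        return False
    new_rle = (8 <= width <= 0x7FFF and head[0] == 2 and head[1] == 2
               and (head[2] & 0x80) == 0)
    if not new_rle:
        # Assumption: flat (uncompressed) 4-byte RGBE pixels.
        rest = 4 * width - 4
        return len(f.read(rest)) == rest
    if (head[2] << 8 | head[3]) != width:
        raise ValueError("scanline length does not match resolution")
    for _component in range(4):  # R, G, B and E are each run-length coded
        filled = 0
        while filled < width:
            code = f.read(1)
            if not code:
                return False
            count = code[0]
            if count > 128:  # run: the next byte repeated (count - 128) times
                count -= 128
                if not f.read(1):
                    return False
            elif count == 0:
                raise ValueError("corrupt run length")
            elif len(f.read(count)) < count:  # literal: count bytes copied as-is
                return False
            filled += count
        if filled != width:
            raise ValueError("run overflows the scanline")
    return True


def scanlines_complete(f):
    """Return (complete, expected) scanline counts for a picture opened in 'rb' mode."""
    nscan, width = _read_resolution(f)
    complete = 0
    while complete < nscan and _read_scanline(f, width):
        complete += 1
    return complete, nscan


if __name__ == "__main__":
    for path in sys.argv[1:]:
        with open(path, "rb") as fp:
            done, total = scanlines_complete(fp)
        state = "complete" if done == total else "TRUNCATED"
        print(f"{path}: {done}/{total} scanlines ({100 * done / total:.0f}%), {state}")
```

Passing the picture files as command-line arguments prints one line per file; any file reported as truncated would be a candidate for resuming rather than filtering.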

The errors/warnings from the logfile are below:

rpict: 289913 rays, 6.90% after 0.001u 0.000s 15.973r hours on hammer12.hpc.rcc.psu.edu (PID 19734)

rpict: signal - Terminate

rpict: 1003391 rays, 31.03% after 0.004u 0.000s 15.976r hours on hammer12.hpc.rcc.psu.edu (PID 19649)

rpict: 464166 rays, 13.79% after 0.002u 0.000s 15.968r hours on hammer12.hpc.rcc.psu.edu (PID 19859)

rpict: signal - Hangup

rpict: 289913 rays, 6.90% after 0.001u 0.000s 15.973r hours on hammer12.hpc.rcc.psu.edu (PID 19734)

rpict: signal - Hangup

rpict: 1003391 rays, 31.03% after 0.004u 0.000s 15.976r hours on hammer12.hpc.rcc.psu.edu (PID 19649)
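To compare the processes at a glance, here is a minimal Python sketch that pulls the last progress report for each PID out of a log like the one above, along with any "signal" lines. It assumes the report format shown in these lines, reading u, s and r as user CPU, system CPU and elapsed wall-clock hours, and the percentage as the portion of the picture finished. The function and class names are hypothetical.

```python
import re
import sys
from dataclasses import dataclass

# Matches progress reports such as:
# rpict: 289913 rays, 6.90% after 0.001u 0.000s 15.973r hours on host (PID 19734)
REPORT = re.compile(
    r"rpict: (?P<rays>\d+) rays, (?P<pct>[\d.]+)% after "
    r"(?P<user>[\d.]+)u (?P<sys>[\d.]+)s (?P<real>[\d.]+)r hours "
    r"on (?P<host>\S+) \(PID (?P<pid>\d+)\)"
)
SIGNAL = re.compile(r"rpict: signal - (?P<name>\w+)")


@dataclass
class Progress:
    rays: int
    percent: float      # portion of the picture finished
    user_hours: float   # assumed: user CPU time
    real_hours: float   # assumed: elapsed wall-clock time


def summarize(log_text):
    """Return ({pid: latest Progress}, [signal names in order of appearance])."""
    latest, signals = {}, []
    for line in log_text.splitlines():
        report = REPORT.search(line)
        if report:
            latest[int(report["pid"])] = Progress(
                rays=int(report["rays"]),
                percent=float(report["pct"]),
                user_hours=float(report["user"]),
                real_hours=float(report["real"]),
            )
            continue
        signal = SIGNAL.search(line)
        if signal:
            signals.append(signal["name"])
    return latest, signals


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as fh:
        latest, signals = summarize(fh.read())
    for pid, p in sorted(latest.items()):
        print(f"PID {pid}: {p.percent:.2f}% done, {p.rays} rays, "
              f"{p.user_hours:.3f} CPU h over {p.real_hours:.3f} wall h")
    print("signals received:", ", ".join(signals) or "none")
```

For the excerpt above, this would report PIDs 19649, 19734 and 19859 at 31.03%, 6.90% and 13.79% respectively, plus one Terminate and two Hangup signals.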

Update (10/01): As everyone pointed out, the issue was that my rpict processes were being killed. I reran the renderings with lower settings and everything worked fine.

This problem stems from rpict terminating before finishing. Are there any errors in the log (logfiles/unamedscene.log)?

Andy, I have updated my question with the error messages I found. There were also a large number of warnings about non-planar vertices, which I haven't pasted above.

I had earlier rendered the same views with gensky, and that worked fine. Could the errors have anything to do with my luminaires being underneath a dielectric material? The surface normals of the dielectric face outwards, i.e., away from the luminaires.

Electric lights underwater will take longer to render than a sky, but that shouldn't be a problem. It looks like all the rpict processes were killed after about 16 hours. You can continue each rendering where it left off with rpict's -ro option (see the man page).
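As a hedged illustration of resuming one view, here is a small Python sketch that builds and runs the command. It assumes (check the rpict man page) that -ro takes the path of the interrupted picture and writes the finished picture back into that same file, and that all other options must match the original run exactly. The option values, file names and helper names below are hypothetical placeholders.

```python
import shlex
import subprocess


def resume_command(original_options, picture, octree):
    """Build an rpict command line that resumes `picture` in place.

    original_options: the exact rpict options used for the interrupted run
    (view, resolution, ambient settings, ...), without any output redirection.
    """
    return ["rpict", *original_options, "-ro", picture, octree]


def resume(original_options, picture, octree):
    """Run rpict to finish an interrupted picture (raises if rpict fails)."""
    cmd = resume_command(original_options, picture, octree)
    print("running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical placeholders: substitute the options rad actually used.
    options = ["-vf", "views/view1.vf", "-x", "1844", "-y", "863", "-ad", "10000"]
    print(shlex.join(resume_command(options, "view1.unf", "scene.oct")))
```

If the processes were started from an interactive session, running the resumed commands under nohup may keep a closed terminal from sending the Hangup signal seen in the log.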

It would be nice to figure out why everything stopped. What system are you running this on? Is it possible that there is a time limit on running processes?
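One quick check for a per-process limit is the CPU-time limit (what ulimit -t reports). Here is a minimal Python sketch using the standard resource module (Unix only). Note that this limit counts CPU seconds rather than wall-clock time, and a batch scheduler's job time limit on a cluster will not show up here, so that would need to be checked in the scheduler's own settings.

```python
import resource


def _fmt(limit):
    """Render an rlimit value in seconds, or 'unlimited'."""
    return "unlimited" if limit == resource.RLIM_INFINITY else f"{limit} s"


def cpu_time_limits():
    """Return the (soft, hard) CPU-time limits of the current process as text."""
    soft, hard = resource.getrlimit(resource.RLIMIT_CPU)
    return _fmt(soft), _fmt(hard)


if __name__ == "__main__":
    soft, hard = cpu_time_limits()
    # Limits are inherited from the shell that launched this script.
    print(f"CPU time limit: soft={soft}, hard={hard}")
```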

I wasn't aware of the -ro option. My directory structure is still intact, so I will try to resume the renderings from where they were truncated.

Radiance was compiled from source from the NREL repository on GitHub. As far as I know there isn't a time limit on processes, but I could be wrong about that. I was asking about the materials because the log shows that in one case the picture was only 6.9% complete after nearly 16 hours.
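To put the 6.9% figure in perspective, here is a rough linear extrapolation, assuming the rest of the picture costs as much per percent as the part already done (rarely exact, since some regions of a scene are much more expensive than others):

$$
T_{\text{total}} \approx \frac{T_{\text{elapsed}}}{f_{\text{done}}} = \frac{15.973\ \text{h}}{0.0690} \approx 231\ \text{h} \approx 9.6\ \text{days}
$$

The same estimate for the process that reached 31.03% gives about 15.976 h / 0.3103 ≈ 51 h.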

Update: I was running multiple simulations at the time, so I mixed up the systems in my last comment. The renderings were run on a Linux cluster (details above).